Top Data Science Tools to Stay Ahead in the Coming Years!

- archi jain

- Dec 26, 2024

- 4 min read

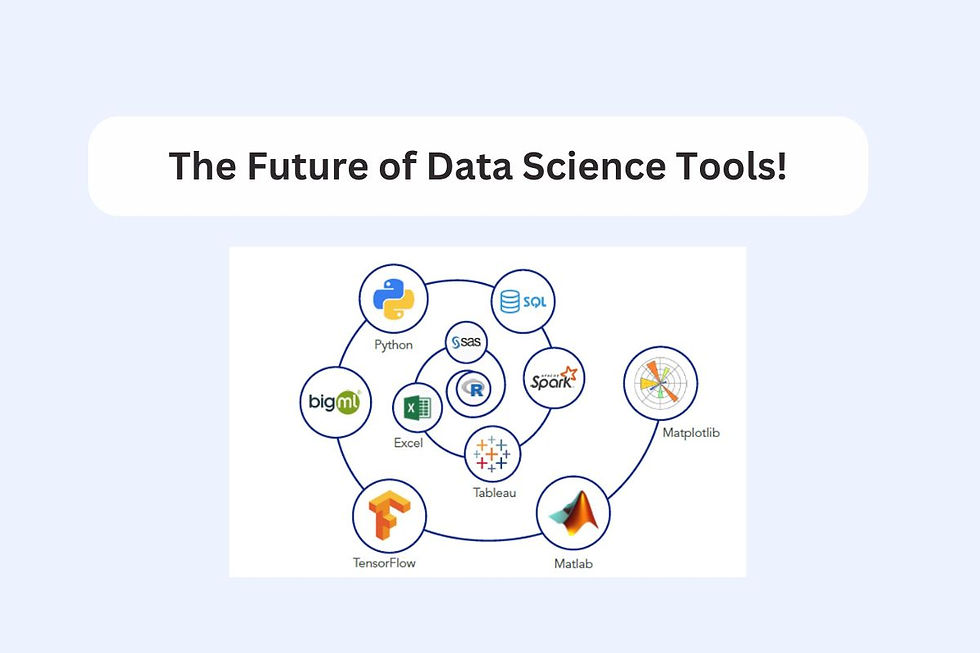

Data science is one of the fastest-growing fields, and with the increasing demand for data-driven decision-making across industries, the tools used by data scientists have become more advanced and powerful. Whether you're involved in machine learning, data analysis, or artificial intelligence, knowing the right tools can make a significant difference in your ability to process, analyze, and interpret data effectively. In this article, we’ll explore the top data science tools that will keep you ahead of the curve in the coming years!

Python: The Powerhouse of Data Science

Why is Python the Go-To Language for Data Science?

Python continues to be the most popular language in the data science ecosystem. Known for its simplicity and readability, Python allows data scientists to perform a wide range of tasks, from data manipulation to building machine learning models. The language’s extensive support for data analysis and visualization, along with libraries such as NumPy, Pandas, and Matplotlib, makes it the first choice for many professionals in the field.

Key Libraries for Data Science in Python

NumPy: A fundamental package for scientific computing in Python, providing support for large, multi-dimensional arrays and matrices.

Pandas: A library designed for data manipulation and analysis, especially for structured data.

Matplotlib: A plotting library used for creating static, animated, and interactive visualizations.

Scikit-learn: An essential machine learning library that helps in building predictive models.
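
To make the division of labor between these libraries concrete, here is a minimal sketch that uses NumPy for array arithmetic and Pandas for grouping. The sales figures, column names, and variable names are invented for illustration and are not taken from any real dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical sample data: sales figures invented for illustration.
sales = pd.DataFrame({
    "region": ["north", "south", "north", "south"],
    "units": [120, 95, 140, 110],
    "unit_price": [2.5, 3.0, 2.5, 3.0],
})

# NumPy: multiply two whole arrays element by element, with no explicit loop.
revenue = sales["units"].to_numpy() * sales["unit_price"].to_numpy()
sales["revenue"] = revenue

# Pandas: group rows by region and sum the revenue within each group.
revenue_by_region = sales.groupby("region")["revenue"].sum()

print(revenue_by_region)
```

The same pattern (load into a DataFrame, compute columns with array operations, then group and summarize) carries over to much larger tables.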

R: A Language Built for Statistics

Why is R Preferred by Statisticians?

While Python dominates in general-purpose data science, R is specifically designed for statistics and data analysis. It is widely used in academic research and specialized fields like bioinformatics and econometrics, thanks to its comprehensive set of libraries and tools designed for statistical operations and analysis.

Powerful Libraries in R for Data Science

ggplot2: This library allows you to create elegant and customizable data visualizations.

dplyr: A powerful package for data manipulation that simplifies common data wrangling tasks.

tidyr: Used for cleaning and reshaping data into a tidy format.

Jupyter Notebooks: An Interactive Environment for Data Science

How Jupyter Makes Data Exploration Easier

Jupyter Notebook is a tool for creating and sharing interactive documents that combine code, visualizations, and narrative text. This environment is particularly useful for exploratory data analysis (EDA), and data scientists favor it because live code runs right alongside its visual output.

Benefits of Using Jupyter for Data Science Projects

Supports multiple programming languages through language kernels, including Python, R, and Julia.

Enables real-time code execution and testing, making it ideal for experimentation.

Great for sharing insights, creating documentation, and collaborating with teams.

SQL: The Bedrock of Data Management

Why SQL Remains Essential for Data Scientists

No matter how advanced your data science skills are, SQL (Structured Query Language) remains an essential tool. It is the standard language for querying and managing relational databases. SQL helps you retrieve, filter, aggregate, and manipulate data, which is the foundation for performing data analysis and building models.

SQL's Role in Data Preprocessing and Cleaning

Extracting Data: SQL queries are used to retrieve raw data from databases.

Data Manipulation: Sorting, filtering, and joining data to create useful datasets.

Data Aggregation: Performing operations like SUM, COUNT, AVG, etc., to derive insights from data.
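
The three roles above (extracting, manipulating, aggregating) can be sketched in a single query. This example uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and all their rows are made up for illustration.

```python
import sqlite3

# In-memory database with two small, hypothetical tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, city TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Indore'), (2, 'Bhopal');
    INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 30.0), (3, 2, 20.0), (4, 2, 5.0);
""")

# Extract (SELECT), manipulate (JOIN, WHERE, ORDER BY), aggregate (COUNT, SUM, AVG).
query = """
    SELECT c.city,
           COUNT(*)      AS n_orders,
           SUM(o.amount) AS total,
           AVG(o.amount) AS average
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.amount >= 10
    GROUP BY c.city
    ORDER BY total DESC
"""
rows = conn.execute(query).fetchall()
conn.close()

for city, n_orders, total, average in rows:
    print(city, n_orders, total, average)
```

Doing the filtering and aggregation inside the database means only the summarized rows travel to your analysis code, which matters once tables grow large.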

Apache Spark: The Engine for Big Data Processing

How Apache Spark Handles Big Data

Apache Spark is an open-source, distributed computing engine for processing large datasets quickly. It handles both batch jobs and near-real-time stream processing, which makes it a critical tool for working with big data. It scales from a single machine to clusters of thousands of nodes.

Speed and Scalability with Apache Spark

In-memory computing: Spark’s ability to store intermediate data in memory rather than writing it to disk significantly boosts processing speed.

Stream processing: Spark supports both batch and near-real-time stream processing (streams are typically handled as a series of small batches), making it a strong choice for high-velocity data.

Distributed processing: Easily scalable to handle petabytes of data.
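
The idea behind distributed processing can be illustrated without a cluster. The sketch below is not Spark code and does not use Spark's API; it is a standard-library toy that mimics the pattern a distributed engine applies across machines: split the data into partitions, process each partition independently, then combine the partial results. The helper names and sample lines are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, n_partitions):
    """Deal records round-robin into n_partitions lists (hypothetical helper)."""
    return [records[i::n_partitions] for i in range(n_partitions)]


def count_words(partition):
    """Map step: count words using only the lines in one partition."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


# Made-up input standing in for a large dataset.
lines = [
    "spark handles big data",
    "big data needs big tools",
    "spark scales out",
]
partitions = split_into_partitions(lines, n_partitions=2)

# Each worker handles one partition; threads stand in for cluster nodes here.
with ThreadPoolExecutor(max_workers=2) as pool:
    partial_counts = list(pool.map(count_words, partitions))

# Reduce step: merge the per-partition counts into one total.
total_counts = sum(partial_counts, Counter())

print(total_counts.most_common(3))
```

The partial counts stay in memory as ordinary Python objects between the two steps, which loosely mirrors why keeping intermediate data in memory avoids the cost of writing it to disk.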

Tableau: Powerful Data Visualization Tool

The Importance of Data Visualization in Data Science

While analyzing data is crucial, presenting your findings in an understandable way is equally important. Tableau is a powerful data visualization tool used to create interactive and shareable dashboards. It simplifies the process of creating stunning visual representations of your data that help stakeholders understand complex data insights.

Why Tableau Stands Out in Data Visualization

User-friendly: Most visualizations can be built with a drag-and-drop interface, without writing code.

Interactive Dashboards: Users can interact with the data by filtering or drilling down into specific data points.

Integration: Supports integration with a wide variety of data sources like Excel, Google BigQuery, SQL databases, and more.

TensorFlow: The AI and Deep Learning Framework

Why TensorFlow is a Game-Changer for AI and Deep Learning

Developed by Google, TensorFlow is an open-source library for numerical computation and one of the most widely used frameworks for building machine learning and deep learning models. TensorFlow allows you to build complex neural networks and AI systems with relative ease, which has made it a common choice in fields like computer vision, speech recognition, and natural language processing (NLP).

Key Features and Benefits of TensorFlow

Deep Learning: Supports a variety of neural networks, including convolutional and recurrent networks.

Scalability: TensorFlow can be deployed across multiple devices, from mobile phones to large-scale clusters.

TensorFlow Lite: Enables mobile app developers to run machine learning models on smartphones and IoT devices.

How to Gain Practical Knowledge in Data Science

As the demand for skilled data scientists grows, gaining hands-on experience with industry-standard tools becomes increasingly essential. If you're aiming to build a strong foundation in data science, focusing on practical learning through structured programs can help you gain valuable skills.

Many professionals enhance their proficiency by participating in a Data Science course in Indore, Bhopal, Gurgaon, and other locations in India. These courses are designed to provide in-depth training in the core tools and techniques that data scientists use daily, from programming languages like Python and R to machine learning algorithms, data tools like SQL and Spark, and visualization tools like Tableau.

Conclusion

The world of data science is fast-paced and continually evolving. Mastering the right tools is crucial for data scientists to stay relevant and successful in this field. Whether you're analyzing data with Python, building machine learning models with TensorFlow, or visualizing insights with Tableau, the tools mentioned in this article are key to achieving excellence in data science. By staying up-to-date with these powerful tools and technologies, you can ensure that you remain competitive and ahead of the curve in the coming years.
